Artificial intelligence (AI) technologies have evolved rapidly over the past decade, influencing sectors from healthcare and finance to education and security. Recognizing both the tremendous potential and the significant risks associated with AI, the European Union (EU) has taken pioneering steps to regulate AI development and deployment. Central to this effort is the Artificial Intelligence Act (AI Act), a landmark piece of legislation aimed at ensuring AI technologies are trustworthy, human-centric, and aligned with European values of transparency, accountability, and fundamental rights. Together with existing EU standards, the AI Act seeks to create a robust regulatory framework that governs the ethical and safe development of AI across member states.

EU Standards: A Foundation for Trustworthy AI

The European Union has historically set high regulatory standards in sectors such as consumer protection, data privacy (e.g., GDPR), and product safety. With AI, the EU aims to maintain its leadership by establishing clear, enforceable standards that guide the safe and ethical use of emerging technologies.

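Key principles underlying EU standards for AI, drawn largely from the 2019 Ethics Guidelines for Trustworthy AI prepared by the Commission's High-Level Expert Group on AI, include: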

- Human agency and oversight: Ensuring that humans remain in control of AI systems.

- Technical robustness and safety: Guaranteeing that AI systems are secure, resilient, and function reliably.

- Privacy and data governance: Safeguarding data rights and ensuring data is collected and used lawfully.

- Transparency: Making AI operations explainable and understandable to users.

- Diversity, non-discrimination, and fairness: Preventing bias and discrimination within AI models.

- Accountability: Defining responsibility for AI decisions and ensuring redress mechanisms are in place.

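Several standardization initiatives complement the AI Act. These include work by the European Committee for Standardization (CEN), the European Committee for Electrotechnical Standardization (CENELEC), and the European Telecommunications Standards Institute (ETSI) on technical standards for AI quality, safety, and conformity. Providers that follow harmonized standards adopted under the Act benefit from a presumption of conformity with the requirements those standards cover.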

The Artificial Intelligence Act (AI Act)

Background

Proposed by the European Commission in April 2021, the Artificial Intelligence Act is widely regarded as the world's first comprehensive legal framework for AI. Its goal is to create a single set of rules that harmonizes AI regulation across the EU, ensuring both the safety of AI applications and the protection of fundamental rights.

Scope and Objectives

The AI Act covers:

- AI systems placed on the market, put into service, or used within the EU, regardless of where their providers are established.

- Providers and deployers located outside the EU whose AI systems produce output that is used in the EU.
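The two scope bullets above can be read as a simple decision rule. The sketch below is a minimal illustration under stated assumptions, not a legal test: the `Actor` fields and the `in_territorial_scope` function are hypothetical, the role names follow the final text's "provider" and "deployer" terminology, and the Act's exclusions (for example military uses, scientific research, and purely personal use) are ignored.

```python
from dataclasses import dataclass


@dataclass
class Actor:
    """Hypothetical, simplified profile of an organization (not the Act's legal definitions)."""
    role: str                  # assumed values: "provider" or "deployer"
    established_in_eu: bool    # established or located in the EU
    places_on_eu_market: bool  # places the system on the EU market or puts it into service there
    output_used_in_eu: bool    # the system's output is used in the EU


def in_territorial_scope(actor: Actor) -> bool:
    """Rough approximation of the Act's territorial reach; ignores all exclusions."""
    if actor.role == "provider" and actor.places_on_eu_market:
        return True  # covered wherever the provider is established
    if actor.role == "deployer" and actor.established_in_eu:
        return True
    # Providers and deployers outside the EU are still covered when output is used in the EU.
    return actor.output_used_in_eu
```

For instance, a provider based outside the EU that sells its system to EU customers, `Actor("provider", False, True, False)`, falls within scope under this sketch.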

Its main objectives are:

- To ensure that AI systems placed on the EU market are safe and respect existing laws.

- To foster investment and innovation in trustworthy AI.

- To enhance governance and enforcement of existing fundamental rights and safety laws.

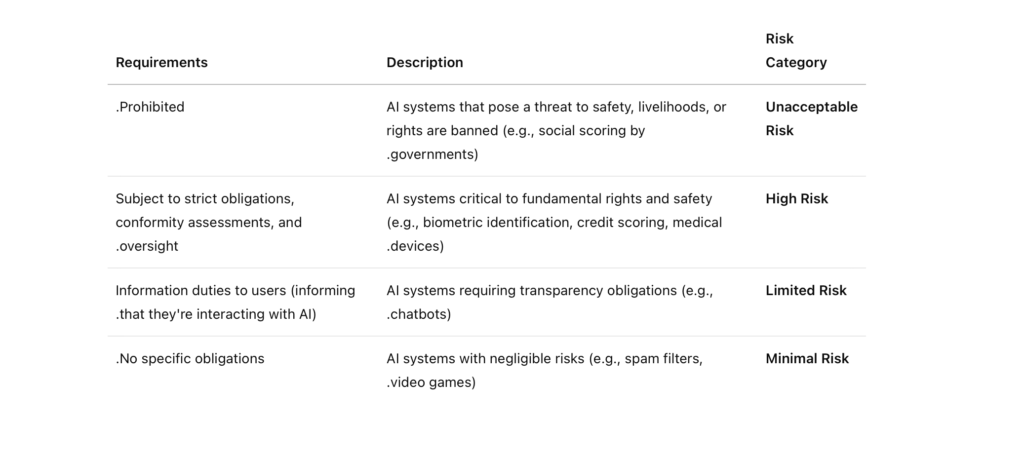

Risk-Based Approach

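The AI Act sorts AI systems into four risk tiers:

- Unacceptable risk: Practices considered a clear threat to safety or fundamental rights, such as social scoring or manipulative techniques that cause significant harm, are prohibited.

- High risk: Systems used in sensitive areas such as critical infrastructure, education, employment, access to essential services, law enforcement, and migration, as well as AI used as a safety component of regulated products, must meet strict requirements before and after being placed on the market.

- Limited risk: Systems such as chatbots and generators of synthetic content are subject to transparency obligations, for example informing people that they are interacting with an AI system.

- Minimal risk: Most other systems, such as spam filters or AI in video games, face no additional obligations.

Separately, the final text sets obligations for providers of general-purpose AI models, with stricter rules for models that pose systemic risk.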
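To make the tiering concrete, here is a minimal Python sketch that models the four tiers (unacceptable, high, limited, and minimal risk) as an enum and maps a few example use cases to them. The `RiskTier` enum, the `EXAMPLE_USE_CASES` table, and `classify` are hypothetical; real classification depends on a legal reading of the Act, so the function deliberately refuses to guess for anything missing from its table.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "strict requirements before and after market placement"
    LIMITED = "transparency obligations"
    MINIMAL = "no additional obligations"


# Hypothetical lookup table of example use cases. Real classification turns on
# Articles 5 and 6 and Annexes I and III of the Act, not on string matching.
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "cv screening for recruitment": RiskTier.HIGH,
    "credit scoring": RiskTier.HIGH,
    "customer service chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}


def classify(use_case: str) -> RiskTier:
    """Return the illustrative tier, refusing to guess for unlisted use cases."""
    key = use_case.strip().lower()
    if key not in EXAMPLE_USE_CASES:
        raise ValueError(f"{use_case!r} needs a case-by-case legal assessment")
    return EXAMPLE_USE_CASES[key]
```

Raising an error for unknown inputs, rather than defaulting to the minimal tier, reflects that an unlisted system is not automatically low-risk.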

Obligations for High-Risk AI Systems

Providers of high-risk AI systems (the organizations that develop them or place them on the market) must, among other things:

- Implement a risk management system.

- Ensure high-quality datasets to minimize biases.

- Maintain technical documentation and logging capabilities.

- Ensure effective human oversight while the system is in use.

- Achieve appropriate levels of accuracy, robustness, and cybersecurity.

- Provide clear instructions for use.

- Register the system in an EU database for high-risk AI.
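A compliance team might track these obligations as a simple checklist. The sketch below is a hypothetical internal tool, not anything the Act prescribes: `HIGH_RISK_OBLIGATIONS` uses shorthand labels for the obligations listed above (including accuracy, robustness, and cybersecurity, which the Act also requires), and `ComplianceRecord` is an illustrative name.

```python
from dataclasses import dataclass, field

# Hypothetical shorthand for the high-risk obligations; not official article titles.
HIGH_RISK_OBLIGATIONS = (
    "risk management system",
    "data quality and governance",
    "technical documentation",
    "logging",
    "human oversight",
    "accuracy, robustness and cybersecurity",
    "instructions for use",
    "EU database registration",
)


@dataclass
class ComplianceRecord:
    system_name: str
    completed: set[str] = field(default_factory=set)

    def mark_done(self, obligation: str) -> None:
        """Record an obligation as addressed, rejecting labels outside the checklist."""
        if obligation not in HIGH_RISK_OBLIGATIONS:
            raise ValueError(f"unknown obligation: {obligation!r}")
        self.completed.add(obligation)

    def outstanding(self) -> list[str]:
        """List unmet obligations in checklist order, so reports are stable."""
        return [o for o in HIGH_RISK_OBLIGATIONS if o not in self.completed]
```

Validating labels in `mark_done` keeps typos from silently marking a non-existent obligation as complete.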

Governance and Enforcement

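- European Artificial Intelligence Board: A body of member-state representatives that advises the Commission and supports consistent application of the Act across member states.

- AI Office: A unit within the European Commission that supervises general-purpose AI models and coordinates enforcement at EU level.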

- National competent authorities: Each member state must designate at least one notifying authority and at least one market surveillance authority to supervise compliance.

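- Penalties: Fines for prohibited practices can reach €35 million or 7% of total worldwide annual turnover, whichever is higher; most other breaches can draw up to €15 million or 3%, and supplying incorrect information to authorities up to €7.5 million or 1%. For SMEs and start-ups, the lower of the two amounts applies.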
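The "whichever is higher" rule is a simple maximum of a fixed cap and a share of turnover. The sketch below computes only the statutory ceiling under the tiers of Article 99 of Regulation (EU) 2024/1689 (fines for general-purpose AI model providers, set separately, are not modeled); the `FINE_CEILINGS` keys and the `max_fine` function are hypothetical names, and actual fines are set case by case below this ceiling. `Decimal` avoids floating-point rounding on monetary amounts.

```python
from decimal import Decimal

# Ceilings per violation category: (fixed cap in EUR, share of worldwide annual turnover).
FINE_CEILINGS = {
    "prohibited_practice": (Decimal("35000000"), Decimal("0.07")),
    "other_obligation": (Decimal("15000000"), Decimal("0.03")),
    "incorrect_information": (Decimal("7500000"), Decimal("0.01")),
}


def max_fine(violation: str, worldwide_turnover_eur: Decimal, is_sme: bool = False) -> Decimal:
    """Return the statutory ceiling, not the fine a regulator would actually impose."""
    fixed, share = FINE_CEILINGS[violation]
    turnover_based = worldwide_turnover_eur * share
    # Large undertakings: whichever is higher. SMEs and start-ups: whichever is lower.
    return min(fixed, turnover_based) if is_sme else max(fixed, turnover_based)
```

For a company with €2 billion in turnover, 7% is €140 million, which exceeds the €35 million fixed cap, so the ceiling for a prohibited practice is €140 million. For an SME with €10 million in turnover, 7% is €700,000, which is lower than €35 million and therefore applies.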

Challenges and Criticisms

While the AI Act is a groundbreaking initiative, it has faced criticism from various stakeholders:

- Innovation Concerns: Tech companies argue that strict regulations could stifle innovation and create excessive burdens for startups.

- Definition Ambiguity: Critics argue that the broad definition of AI in the Act might capture too many systems unnecessarily.

- Enforcement Complexity: Ensuring consistent application across diverse member states with varying technological maturity is a major challenge.

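- Civil Rights Concerns: Some NGOs argue that the AI Act does not go far enough in banning harmful AI applications; for example, they contend that its exceptions allowing law enforcement to use real-time remote biometric identification in public spaces leave room for biometric mass surveillance.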

Future Outlook

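After trilogue negotiations between the European Parliament, the Council, and the Commission, the AI Act was formally adopted in 2024, published in the Official Journal as Regulation (EU) 2024/1689, and entered into force on 1 August 2024. Its obligations apply in stages: prohibited practices from 2 February 2025, rules for general-purpose AI models from 2 August 2025, most other provisions from 2 August 2026, and high-risk rules for AI embedded in regulated products from 2 August 2027.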
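Because obligations phase in over several years, organizations often need to check which rules already apply on a given date. The sketch below encodes a simplified summary of the main application dates of Regulation (EU) 2024/1689 as adopted; the `MILESTONES` list and `applicable_on` function are hypothetical names, some individual provisions have other dates, and later amendments could shift the schedule.

```python
from datetime import date

# Simplified summary of key application dates (as adopted; subject to amendment).
MILESTONES = [
    (date(2024, 8, 1), "entry into force"),
    (date(2025, 2, 2), "prohibited practices and AI literacy rules apply"),
    (date(2025, 8, 2), "general-purpose AI model obligations, governance and penalty rules apply"),
    (date(2026, 8, 2), "most remaining provisions apply, including Annex III high-risk systems"),
    (date(2027, 8, 2), "high-risk rules for AI in products covered by Annex I apply"),
]


def applicable_on(day: date) -> list[str]:
    """Return the labels of milestones already reached on the given day."""
    return [label for start, label in MILESTONES if start <= day]
```

Calling `applicable_on(date(2025, 3, 1))` would list only entry into force and the prohibitions, since the later milestones have not yet been reached.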

Once fully operational, the AI Act is expected to:

- Set a global precedent for AI governance, much like the GDPR did for data protection.

- Encourage innovation aligned with ethical standards.

- Provide legal certainty to developers and users of AI systems.

- Serve as a model for countries and regions seeking to regulate AI responsibly.

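The Commission also proposed an AI Liability Directive in 2022 to establish clearer liability rules for damage caused by AI systems, but in February 2025 it announced its intention to withdraw the proposal. Meanwhile, the revised Product Liability Directive, adopted in 2024, explicitly covers software, including AI systems.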

Conclusion

The European Union’s approach to regulating AI through rigorous standards and the pioneering Artificial Intelligence Act represents a historic moment in technological governance. By emphasizing a human-centric, risk-based, and rights-focused framework, the EU is setting the foundation for an AI ecosystem that balances innovation with ethical responsibility. While challenges remain in enforcement and flexibility, the AI Act offers a visionary path forward for regulating one of the most transformative technologies of the 21st century.

As AI continues to shape societies, the European model may influence global regulatory trends, ensuring that AI technologies serve humanity, rather than threaten its core values.